Dr. Dolittle AI

Dolphins join the chat … Take a selfie on the Titanic … And the scam-catcher caught “himself.”

Humans have wanted to talk to animals since forever — from teaching apes sign language to coaxing dogs to say “I love you” (sort of).

But artificial intelligence is flipping the script. For the first time, we don’t have to force animals to learn our language. We can learn theirs.

A whole lot is happening in the strange and delightful world of AI-powered interspecies communication, so let’s dive right in.

Talking to Flipper

Just this week, Google unveiled DolphinGemma, an AI model designed to help people understand those squeaks and clicks that dolphins make.1

It’s basically ChatGPT, but for talking to dolphins.

The model was trained on decades of underwater audio and can now identify recurring vocal patterns, which may amount to dolphin “words.”
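To make “recurring vocal patterns” a little more concrete, here is a toy sketch. It is not DolphinGemma’s actual method, which relies on a far larger neural network. It assumes the audio has already been chopped into discrete sound labels (the labels below are hypothetical). It counts which sound tends to follow which, then guesses the most likely next sound, the same basic idea as a chatbot guessing the next word.

```python
from collections import Counter, defaultdict


def build_bigram_model(tokens):
    """Count how often each sound label is followed by each other label."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if never seen."""
    if token not in model:
        return None
    return model[token].most_common(1)[0][0]


# Hypothetical labels standing in for discretized whistles and clicks
recording = ["whistle_a", "click_burst", "whistle_b",
             "whistle_a", "click_burst", "whistle_b",
             "whistle_a", "click_burst", "squawk"]

model = build_bigram_model(recording)
print(predict_next(model, "whistle_a"))    # click_burst
print(predict_next(model, "click_burst"))  # whistle_b
```

No labels are needed for this kind of training: the “right answer” for each sound is simply the sound that came next in the recording.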

But that’s not all. DolphinGemma can chirp back in kind, using waterproof Google Pixel phones.

And soon the tech will be open-sourced, which could make it a lot easier for researchers to build their own aquatic Duolingo.

For now, DolphinGemma only allows for rudimentary back-and-forth conversations.

But who knows? Maybe in a year or two, you’ll be able to have full-on conversations with dolphins on your vacation.

You’ll finally be able to tell them they’re actually swimming in the Gulf of America (just kidding).

Bird watchers are going to love this

Understanding animals isn’t just a gimmick to sell Google Pixel phones, at least not entirely.

It’s an enormous undertaking aimed at changing how humanity understands its role in nature.

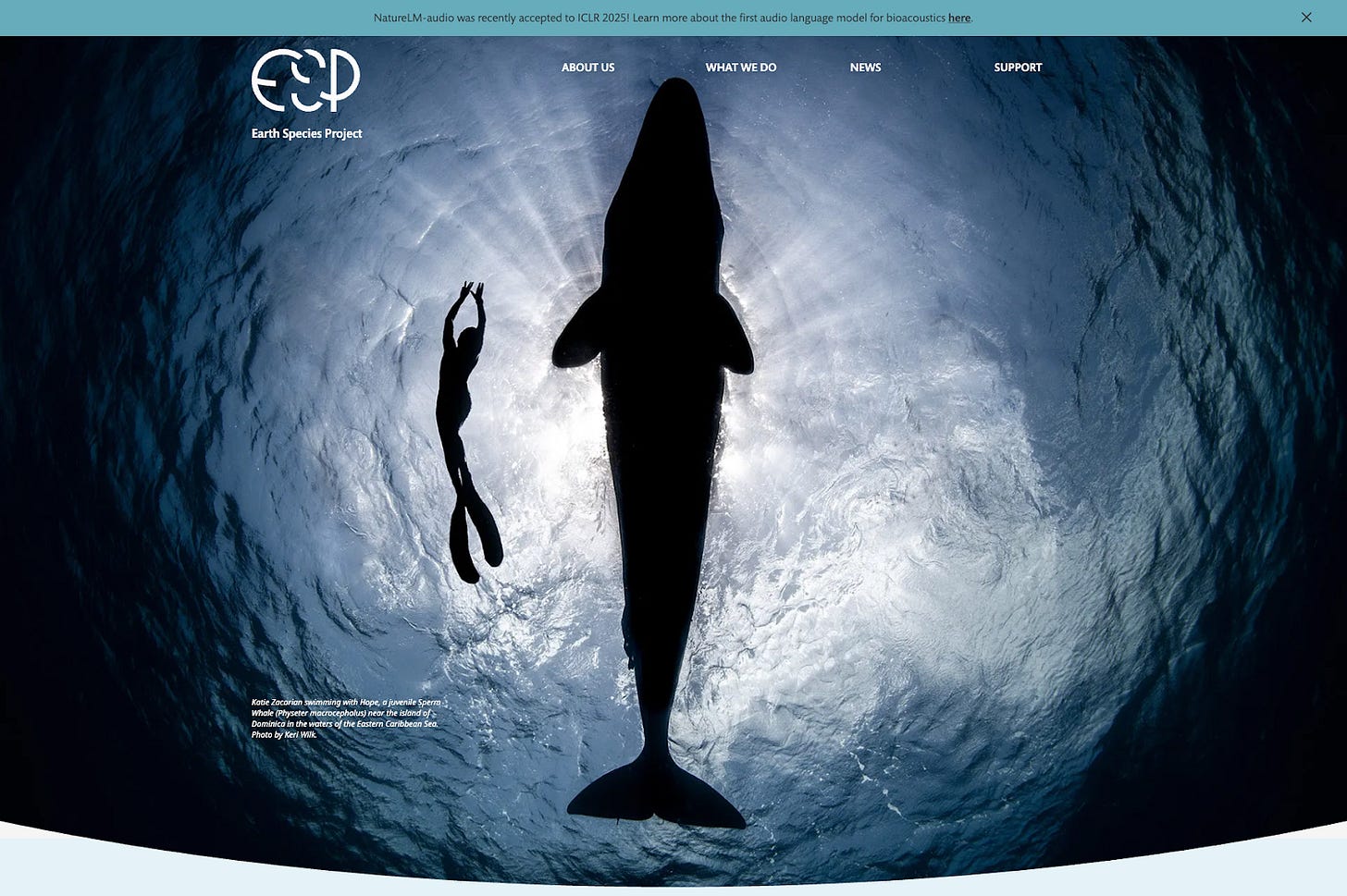

One of the biggest players is the Earth Species Project, a nonprofit with big philanthropic backing. It’s gathering sounds from species all across the animal kingdom.

Pretty soon, when you wake up and hear birds singing as the sun rises, you’ll be able to tell how many are singing, which species they belong to and, most astonishingly, the gist of what they’re saying.

In the next few months, the Earth Species Project will release NatureLM‑audio as free, open‑source software.

That means anyone — from backyard birdwatchers to professional conservationists — will be able to download the code, tweak it, and build tools that “translate” animal sounds into plain English.

It’s all going to be at your fingertips before you know it.

Listening to everybody

The team at the Earth Species Project collected millions of sound clips — each labeled with the species and behavior it represents — and taught the system to discover its own patterns.

Instead of juggling separate programs for birds, whales, bees, and frogs, NatureLM‑audio learns from them all at once.

After the model is trained, you simply enter a short description of what you’d like to know, and NatureLM‑audio does the rest.

Identify the species: Record a chorus on your phone, and within seconds get a list of who’s singing.

What are they saying? Upload any clip to learn whether it’s a mating call, an alarm signal or a mother telling her young to behave.

How many are out there? Instead of tediously counting each animal “speaker,” NatureLM‑audio can estimate how many frogs or birds are in a recording.

Detect emotions: Find out if animal calls sound stressed, relaxed, curious or territorial.

Do your own thing: Plug in just a handful of your own labeled clips to teach the model new tasks. No need for massive datasets.

In short, you’re going to be able to interact with wildlife in much the same way as biologists and data scientists. You’ll be your own eco-storyteller.

Like a lot of advances with AI, the possibilities seem endless.

Teachers could create classroom demos to show students real animal conversations.

Hikers could use apps that tell them in real time whether that grunt they heard came from a dangerous animal or their newest furry friend.

Conservation groups could use automated monitoring systems to keep an eye (or ear) on fragile habitats around the clock.

Citizen‑scientist communities could crowdsource data collection and analysis, turning casual nature lovers into active participants in wildlife research.

And who knows what else people will come up with once they get their hands on the new tech.

So get ready, it’s gonna be wild!


Speaking of animals, ChatGPT can now turn your fur-babies into real babies.

A famous YouTuber who catches crypto scammers just caught scammers who used an AI-generated version of his own face in their scheme.

This one is really mind-blowing. You can put yourself in all sorts of historical moments, from the deck of the Titanic to riding with Genghis Khan.

Made in America: NVIDIA wants to bring the heart of AI home. The company announced this week that it aims to build its AI supercomputers entirely in the United States. Its chips are already being produced at TSMC’s factory in Phoenix, and now it plans to package and test chips in Arizona and to build AI supercomputers in Texas. Overall, NVIDIA says it plans to produce up to $500 billion worth of AI infrastructure in the U.S. over the next four years.

Talking AI: Business executives’ keen interest in AI led the Phoenix Global Forum to make it the topic of a panel discussion last week, Axios’ Jessica Boehm reported. Boehm also moderated the discussion, which touched on robot coworkers, the tricky ethics of using AI and more. One executive said AI had boosted their company’s productivity by $350 million a year. Researchers at Columbia University went even further at their inaugural AI Summit, looking at AI through the lenses of sociology, public health and other disciplines.

AI’s doing police sketches now: Goodyear police just used AI to generate a forensic sketch of a suspect in an attempted kidnapping case, 12News’ Gabriella Bachara reports. Police took a hand-drawn sketch and fed it into ChatGPT, which spit out an image so realistic “it made my jaw drop,” sketch artist and Goodyear Police Officer Michael Bonasera said. He isn’t worried about AI taking his job; instead, he said, it will help police dig into more cold cases and generate new leads.

Cook’s obsession: Apple CEO Tim Cook is reportedly "hell-bent" on launching industry-leading augmented reality (AR) glasses before Meta, with insiders claiming he "cares about nothing else" in product development, Bloomberg reported. Cook is hoping to establish a new product category that could eventually replace smartphones as the next major platform.

Looking to the stars: Astronomers are using a machine-learning algorithm to find planetary systems that could be humanity’s next home, Space reports. So far, researchers at the University of Bern in Switzerland have identified 44 systems that are “highly likely” to include Earth-like planets.

For those about to rock: The French streaming platform Deezer said 18% of the songs uploaded to its platform are fully generated by AI, Reuters reported. A Deezer executive said “we see no sign of it slowing down.” The company, which has nearly 10 million subscribers, launched a detection tool in January to filter out AI-generated songs, as a growing number of artists file lawsuits accusing AI firms of stealing their copyrighted material.

April 14, the day Google unveiled DolphinGemma, also happens to be National Dolphin Day.

Particularly in the case of dolphins, whose play behavior is complex, how are the targets for training the neural network obtained? In other words, if the click language is the input, what target outputs are used to train the translation system?