Talking "heads"

Meet the future of government relations … Deepfake explosion … Every pixel counts.

“Daniel” and “Victoria” are the new faces of the Arizona Supreme Court’s public outreach team.

The thing is, neither of them is real. They’re AI avatars the state judicial branch set up last week to explain court decisions through online videos.

It’s the latest move by government officials, both here in Arizona and around the world, to insert AI avatars into the relationship between officials and the public. And judging by how quickly AI technology is developing, you can bet on seeing these “reporters” get even more sophisticated over the next few years.

So far in Arizona, the court system is moving much faster than any other branch of the state government (and maybe faster than any state court system in the country).

They launched an AI committee last year, saying AI presents “unprecedented opportunities and challenges” that could improve how courts “process cases, streamline workflows and analyze legal information, and impact decision-making.”

Meanwhile, other state officials are bogged down in trying to plan everything before they actually do anything.

The Secretary of State’s Office launched a committee last year, but other than a big report, they haven’t done anything substantial. Gov. Katie Hobbs launched a steering committee just two months ago.

The Legislature also is starting very slowly, as we pointed out in January. Lawmakers introduced just a handful of bills related to AI in the past two years. Meanwhile, lawmakers in dozens of other states are busy dealing with all the wild new ways AI affects people’s lives.

But Arizona’s courts are ready to “get out of our comfort zone and serve the public,” as Chief Justice Ann Timmer put it to 12News.

“It’s a little bit time for us to be our own media, to an extent,” she said.

Who else is doing it?

While Arizona officials are just getting started, governments around the world are way ahead of the game.

There’s even another “Victoria” running around. Ukraine launched “Victoria Shi” to speak on behalf of its Foreign Ministry last year.

The avatar is modeled after a former contestant on the Ukrainian version of The Bachelor. The way it works is Ukrainian officials write statements about consular services or emergency situations abroad and feed those statements to the AI, which then uses the avatar to read them to online audiences.

In a similar vein, Ukrainian officials are using the blonde “Ada” avatar to help citizens access social services, including in Russia-occupied territory where the Ukrainian government no longer has physical offices.

Check out this report on the Ukrainian AI reporter, done by an AI avatar from India Today.

A wild scenario is playing out in Venezuela, where news reporters use AI avatars to remain anonymous and stay safe, while the government uses AI avatars to spread misinformation.

Venezuelan reporters have good reason to use avatars instead of their own faces. After the presidential election last year, at least six reporters were jailed during a government crackdown on nationwide protests.

In response, a group of Venezuelan media organizations launched "Venezuela Retweets," a news show featuring two AI-generated anchors named "La Chama" (The Girl) and "El Pana" (The Dude).

“Right now, being a journalist in Venezuela is a bit like being a firefighter,” Carlos Eduardo Huertas, a Colombian media operator who coordinated the launch of the news show, told CNN. “You still need to attend the fire even though it’s dangerous. The Girl and The Dude want to be instruments for our firefighters: we don’t want to replace journalists, but to protect them.”

The flip side of that coin is Dan Dewhirst. He’s a British actor who made some extra money during the pandemic by letting the AI company Synthesia use his likeness to generate an avatar.

It seemed harmless at the time, but then the Venezuelan government started using an avatar based on Dewhirst to spread propaganda in English (with a U.S. accent, not British), claiming that Venezuela’s economy isn’t actually in shambles, VICE reported.

How we got here

Basically, these AI avatars are the result of “deepfake” technology making dramatic leaps forward.

If you were following politics in 2018, you probably remember when BuzzFeed made a deepfake video of former President Barack Obama and it went viral.

The tech has only gotten better since then. Last year, our sister newsletter, the Arizona Agenda, made one of Kari Lake, complete with lawyer-approved disclaimers that it wasn’t real.

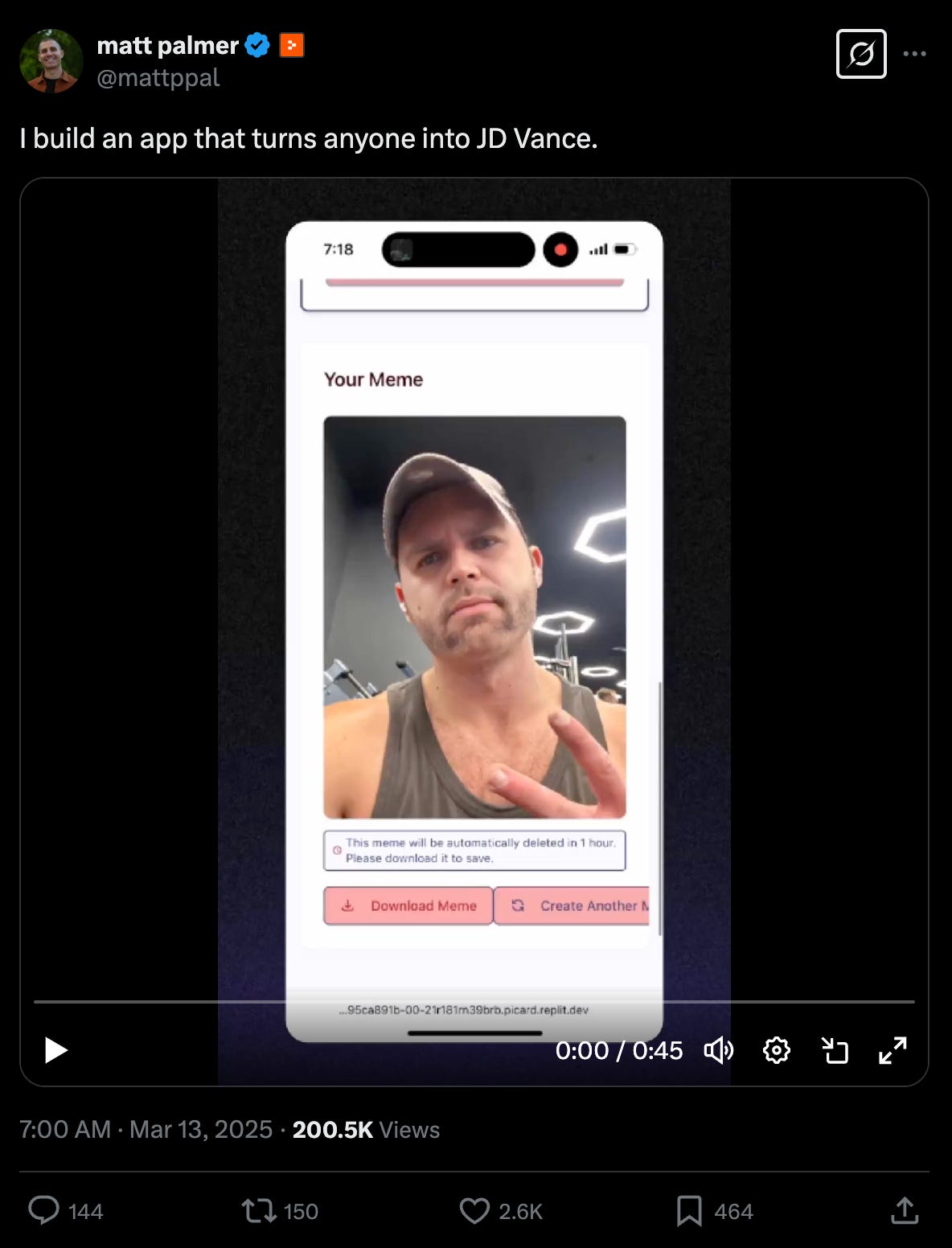

Now look at these deepfake videos we made in just a few minutes this week:

See how much better they’re getting? And they’re only going to get more realistic and easier to make.

Where we’re going

For now, AI avatars are usually just reading a script. Soon they’ll be writing that script, and communicating on your behalf.

And it won’t be just one “god-level” AI doing it all. More likely, there will be billions of specialized agents, such as an AI spokesperson, an AI reporter, or an AI executive assistant, and they will come and go.

These agents are going to talk to each other. In fact, it's happening now, thanks to Anthropic’s latest protocol, which teaches agents to talk to each other directly without human input.

So imagine a court system where your assistant AI talks to the judge’s AI reporter and the court’s AI spokesperson and then reaches out to your attorney’s AI bot and finally comes back to you with the implications of your case.

This is where we’re heading, and in fact we’re already in it.

In the meantime, everybody can look like Vice President JD Vance as they lift weights.

Nuclear steps: An Arizona Senate committee approved a bill last week that would clear the way for data center companies to build small nuclear reactors, the Arizona Mirror’s Jerod MacDonald-Evoy reports. Republican lawmakers accounted for all the “yes” votes in the House earlier this year and on the Senate committee last week. They’re hoping to get ahead of other states, like Utah, and other countries that want to use nuclear reactors to supply the tremendous amounts of energy that data centers will need during the AI boom. As we mentioned last week, Big Tech companies like Microsoft and Google are taking an even bigger swing at nuclear energy for AI.

AI tutors: Several Arizona school districts are using an AI pilot program to help tutor students, ABC15’s Elenee Dao reports. The program was developed at Khan Academy and is known as Khanmigo (which sounds a lot like “conmigo,” or “with me” in Spanish). Students can log into the program whenever they like and ask an AI chatbot questions. The chatbot is supposed to guide them without giving them the answers outright. The program has the support of Superintendent of Public Instruction Tom Horne, who set aside $1.5 million to cover the cost for 100,000 students to use the program.

Pumpkin eaters: A high school student in New Jersey used ChatGPT to answer math problems, pass a take-home test, and do her work for a science lab, the Wall Street Journal reports. But after a year of using it to cheat, she decided to stop and “use my brain” instead. Students are the most common users among the 400 million people who use ChatGPT every week. While educators say AI can help in the classroom, students are often left on their own to decide whether to use it as a tool or to cheat. And researchers are just now getting around to figuring out how prevalent AI-facilitated cheating actually is.

“This is a gigantic public experiment that no one has asked for,” said Marc Watkins, assistant director of academic innovation at the University of Mississippi.

Next question: The conversation about AI in classrooms usually revolves around students using AI, but two pharmacy professors at the University of Arizona are taking a different approach: They recently conducted a study to see if ChatGPT could help them write exam questions. They’re running into the same problem as most people who try to use AI for creative work: AI isn’t great at it yet.

“We found about half of the questions were decent,” Prof. Christopher Edwards said. “They could be used right away with some minor revisions by the instructor so that those questions could probably be used on an exam or quiz.”

Taking it to the next level: If you want to get a coherent view of how U.S. officials are dealing with the possibility of artificial general intelligence (basically, an AI that is better than humans at everything), check out this discussion on the Ezra Klein Show. A main takeaway from the New York Times political commentator’s hour-long show is that officials expect AGI to emerge a lot sooner than you think.

Cyber Bay: The University of South Florida just got a $40 million gift to create a college of artificial intelligence, cybersecurity and computing. It is the first named college in the country “dedicated exclusively to the convergence of AI and cybersecurity,” university officials said. The goal of the gift from ConnectWise co-founder Arnie Bellini is to position the Tampa Bay area as “cyber bay.”

Mirage is the first AI model built to generate ultra-realistic talking videos without actors or pre-recorded footage. With just an audio file, script, or prompt, you can generate talking videos with people, objects, and background environments that don't exist.

Previous models relied on large amounts of pre-recorded video or footage of actors, which they used to “lip sync.” Now everything from body language to eye contact is generated from scratch, and it is almost perfect.