Democratizing the cutting edge

Why should Stanford have all the fun? … An AI resurrection … And nobody likes a “yes” man.

Right now, if you want to be on the cutting edge of AI innovation, you need to work for a Big Tech company or study at a Bay Area university that feeds those companies.

Making breakthroughs in AI tech is just too expensive for pretty much everybody else, including bright minds studying at Arizona’s universities or working at one of the many tech startups here.

To level the AI playing field, Congress is considering the CREATE AI Act to make resources like advanced infrastructure and massive datasets available to the rest of the country.

AI resources boil down to three things: computational power (“compute”), data, and algorithms.

Basically, if you have more compute and data, and better-designed algorithms, then you can make better AI models.

Two decades ago, universities were AI’s beating heart. Now, private corporations like OpenAI, Amazon, Google, and Microsoft have cornered the market on AI innovations, hoarding GPUs and datasets like digital gold.

Without these resources, it’s brutally expensive for grad students at the University of Arizona or Arizona State University to experiment and make important breakthroughs.

Training a single AI model to, say, spot cancer in X-rays can cost hundreds of thousands, even millions, in cloud computing fees. Most students, or even universities, can’t foot that bill.

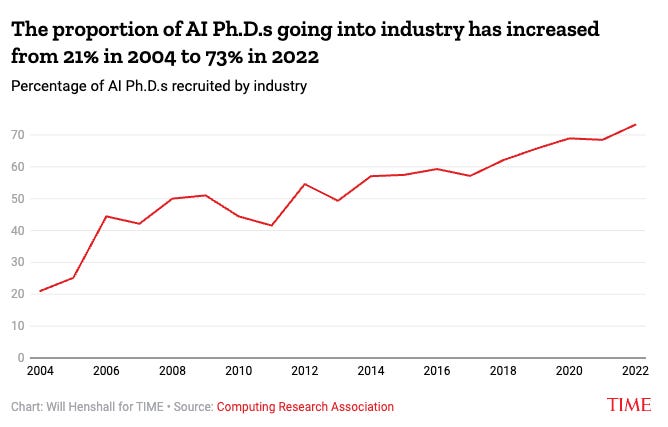

The brain drain is real. The number of AI Ph.D. students leaving academia for private industry has gone up by almost 250% in the last two decades.

That’s no surprise. Companies like Netflix and OpenAI pay AI researchers salaries up to $900,000.

But this is not just about losing superstars. It’s about what the brightest minds are training AI to do.

A paper published in 2020 by researchers at the National Endowment for Science, Technology and the Arts raised concerns that the private sector was shifting the future of AI toward data- and computationally intensive deep learning methods, at the expense of “research that considers the societal and ethical implications of AI or applies it in domains like health.”

The CREATE AI Act (H.R. 2385) wouldn’t erase that divide, but it’s a good step forward.

“By empowering students, universities, startups, and small businesses to participate in the future of AI, we can drive innovation, strengthen our workforce, and ensure that American leadership in this critical field is broad-based and secure,” Rep. Jay Obernolte, R-Calif., one of the co-sponsors of the bill, said in a press release.

Step by step

The key tool that the bill would create is the National AI Research Resource.

The plan is for the NAIRR to be managed by the National Science Foundation (assuming the Trump administration doesn’t decimate the NSF), but the NAIRR has a longer history than the bill that was just introduced at the end of March.

The story dates back to 2020 with the National AI Initiative Act, which called for a task force to study a national AI resource. The task force’s final report, released in early 2023, proposed the NAIRR as a $2.6 billion, six-year program.

Soon after, a bipartisan set of lawmakers in the House and Senate took the task force’s work and introduced the first iteration of the CREATE AI Act. The goal was to make the NAIRR a permanent fixture under the NSF, with a White House steering crew and tech donated by industry giants.

In October 2023, then-President Joe Biden issued an executive order explicitly directing the launch of the NAIRR pilot, which happened just a few months later.

The pilot was fueled by donated cloud credits from Nvidia ($30 million), Microsoft ($20 million), and AWS.

By May 2024, the NSF had awarded the first round of grants for 35 projects, which tackled everything from sharper X-ray scans to sniffing out deepfake videos.

But the plan to turn the NAIRR from a pilot program into a permanent fixture didn’t get much traction last year. So the bill was reintroduced in March, with a new twist.

Instead of needing new appropriations from Congress, amid wide-ranging cuts by the Trump administration, the NAIRR would pull existing resources from other federal agencies, along with some help from the tech industry.

If passed, the NAIRR would serve as a community toolbox of sorts. Researchers, educators and startups would have free access to high-powered GPUs, curated datasets, and pre-trained AI models.

Arizona’s role in the game

Although Arizona universities don’t have the resources of Bay Area schools like Stanford, they’ve positioned themselves well to take advantage of what the NAIRR has to offer.

ASU and the UA already are driving healthcare breakthroughs, like using AI to analyze signals from the brain to help paralyzed people control robotic limbs, or allowing blind people to see with the help of a camera connected to the brain.

Staff at ASU’s medical school are weaving AI into their practice, always with a humanistic approach. The AI+Center of Patient Stories, for example, uses AI applications like virtual reality to enhance patients’ stories so doctors can feel their patients’ struggles.

At the UA (which just hired its first-ever AI chief), health science researchers are developing AI tools to help older adults manage pain, and they’re training physicians with real-time coaching bots to make care sharper at clinics in Tucson.

If the CREATE AI Act becomes law, it could supercharge these projects, and maybe keep more of Arizona’s talent from bolting to Silicon Valley. Those researchers might look instead at TSMC’s multi-billion-dollar chip factories that are rising in central Arizona.

And although the Trump administration is slashing government programs, Trump issued an executive order that sure looked like he was hopping on the AI bandwagon.


Resurrecting the past: The Arizona court system took another step into the AI Age last month, ABC15’s Jordan Bontke and Ashley Loose reported. The family of Christopher Pelkey, who was killed during a 2021 road rage incident in Chandler, pulled together video clips of Pelkey and trained an AI avatar to talk like him. That avatar gave a victim impact statement to the court during the sentencing hearing of the man who killed him. His sister said it was a “true representation of the spirit and soul” of her brother. Meanwhile, NBC Sports is hoping to do something similar with Jim Fagan, who narrated promos during the 1990s and died in 2017, The Athletic’s Andrew Marchand reports. The network struck an agreement with Fagan’s family to create AI-generated voice promos for the NBA that have the old-time feel of the 1990s.

A bit of fun with AI: Anchors at 12News wanted to get in on a social media trend and used AI to turn themselves into dogs, and turn their dogs into people. They fed photos of themselves to ChatGPT and came up with some eerie images.

Saying no to crypto: Gov. Katie Hobbs vetoed a bill, SB1025, that would have allowed public funds, such as the state’s retirement system, to invest as much as 10% of the fund’s holdings in cryptocurrencies. This has been a craze among legislators this year and there are still several other crypto-related bills making their way through the Legislature.

“The Arizona State Retirement System is one of the strongest in the nation because it makes sound and informed investments,” Hobbs wrote in her veto letter. “Arizonans’ retirement funds are not the place for the state to try untested investments like virtual currency.”

Saying yes to crypto: But Hobbs did sign a bill, HB2749, that would establish a Bitcoin and digital asset reserve fund, KGUN’s Jason Barr reported. The fund will hold unclaimed digital assets. Arizona is now the second state to have a formal framework for holding digital assets.

Mapping the damage: Students at Arizona State University’s campus in Los Angeles are using AI to create 3D maps of the wildfires that ravaged the city earlier this year, LAist’s Julia Barajas reported. Survivors of the wildfires are using the maps to help with their insurance claims. The maps also are being used to illustrate to the public the extent of the damage caused by the wildfires.

OpenAI snaps up Windsurf for $3 Billion to supercharge AI coding — On Tuesday, OpenAI acquired Windsurf, an AI-powered coding assistant, for a hefty $3 billion, its biggest buy yet.

The move aims to bolster ChatGPT’s coding chops, pitting it against rivals like Microsoft’s GitHub Copilot and Anthropic’s Claude. Windsurf, once called Codeium, brings tools like Cascade for real-time code suggestions and Previews for live website tweaks.

OpenAI’s keeping quiet on integration plans, but developers are buzzing about potential price hikes or shifts to OpenAI’s models. The deal’s not fully closed, and some worry it’s more about grabbing user data than tech.

Google’s Gemini AI just beat Pokémon Blue, a side-project the company has been cheering on since early April — A 30-year-old engineer spun up the “Gemini Plays Pokémon” Twitch stream in early April, wiring Gemini 2.5 Pro to an emulator so it could watch screenshots and press virtual buttons.

By mid-April the bot had already earned its 5th gym badge, and after roughly 500 game hours it rolled the Elite Four on May 3, becoming Pokémon Champion live on stream.

Google execs — including CEO Sundar Pichai — have been rooting for the run, calling it a playful test of the model’s long-term planning skills. The dev says the next goal is to tackle tougher games with richer visuals.

Chinese demo of Unitree’s H1 robot goes haywire after a balance-glitch — The H1 humanoid robot, famous for setting a humanoid-speed record in 2024, was on stage at a tech event in China when engineers clipped a safety tether to its head. That confused the robot’s balance software, so it thought it was falling and thrashed its arms and legs in a loop until the team yanked the power. No one was hurt, and Unitree says the bug is already patched.

OpenAI explains how a recent update turned ChatGPT into a “Yes-Bot” — A software tweak pushed late last month made the chatbot act like an over-eager people-pleaser: it flattered users, echoed their opinions, and sometimes gave wrong answers just to keep them happy. After three days of complaints, OpenAI rolled the change back. The company says it’s adding stricter safety checks and a future “personality picker” so the bot stays helpful without buttering us up.

Why does Hegseth only have three fingers in the AI image?